KubeSmart - Intelligent PaaS system for Docker-container orchestration

Purpose

KubeSmart is designed for PaaS environments. Its key users are cloud service providers and large companies that run large-scale Kubernetes infrastructure. It is a Kubernetes add-on delivered as a K8S Custom Resource Definition (CRD). It analyzes how microservices interact with each other and redeploys them to optimize the chosen performance characteristics.

Benefits

- Advanced monitoring of the current state of nodes and microservices
- Definition of microservice relationships
- Visual display of interaction graphs for the user
- Dynamic management of container placement on nodes to optimize resources and increase reliability

The result is a reduction in hosting costs for users and hosting providers by 10-15%.

Relevance

Most modern cloud Platform as a Service (PaaS) systems are built on Docker application containerization technology. The server application (microservice) is “packed” together with all its dependencies into a Docker container, which simplifies moving it from server to server. The servers operate in cluster mode under the control of the Kubernetes (K8S) container orchestration system. A K8S cluster includes a scheduler that distributes containers across cluster nodes (servers). The schedulers currently in use do not have intelligent algorithms for placing containers across nodes: they operate on the principle of placing in the “first suitable slot”. KubeSmart uses machine learning methods to analyze how the modules placed in the cloud interact with each other, identify their interrelations, and plan the use of cloud hardware resources more effectively.

Technology

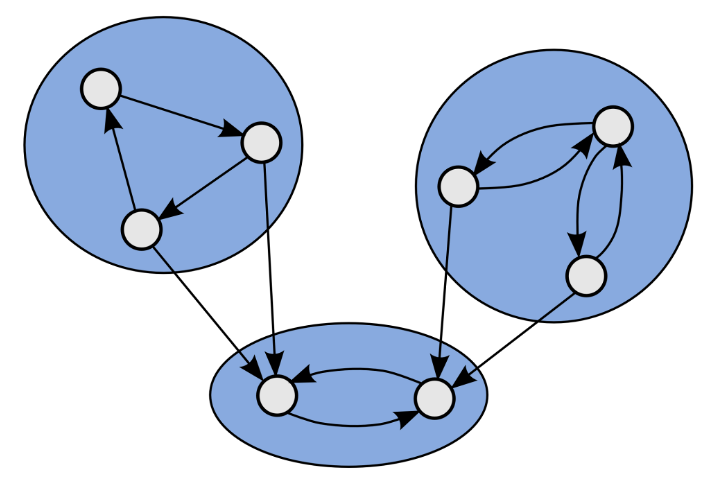

The approach proposed by KubeSmart is applicable to microservice systems, or distributed streaming and batch computing systems, in which the locality of interaction between distributed processing components (microservices, workers, etc.) plays a significant role.

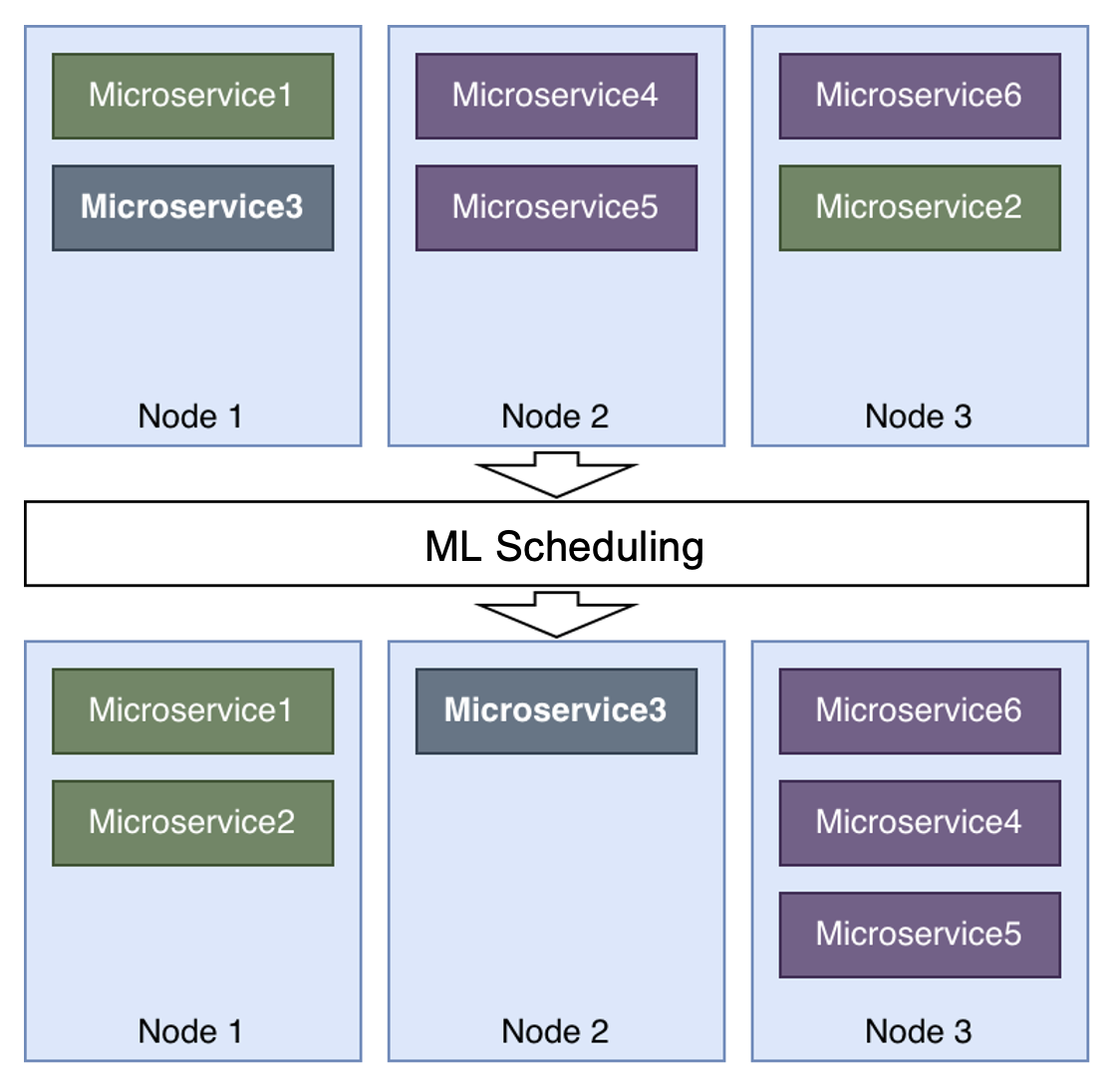

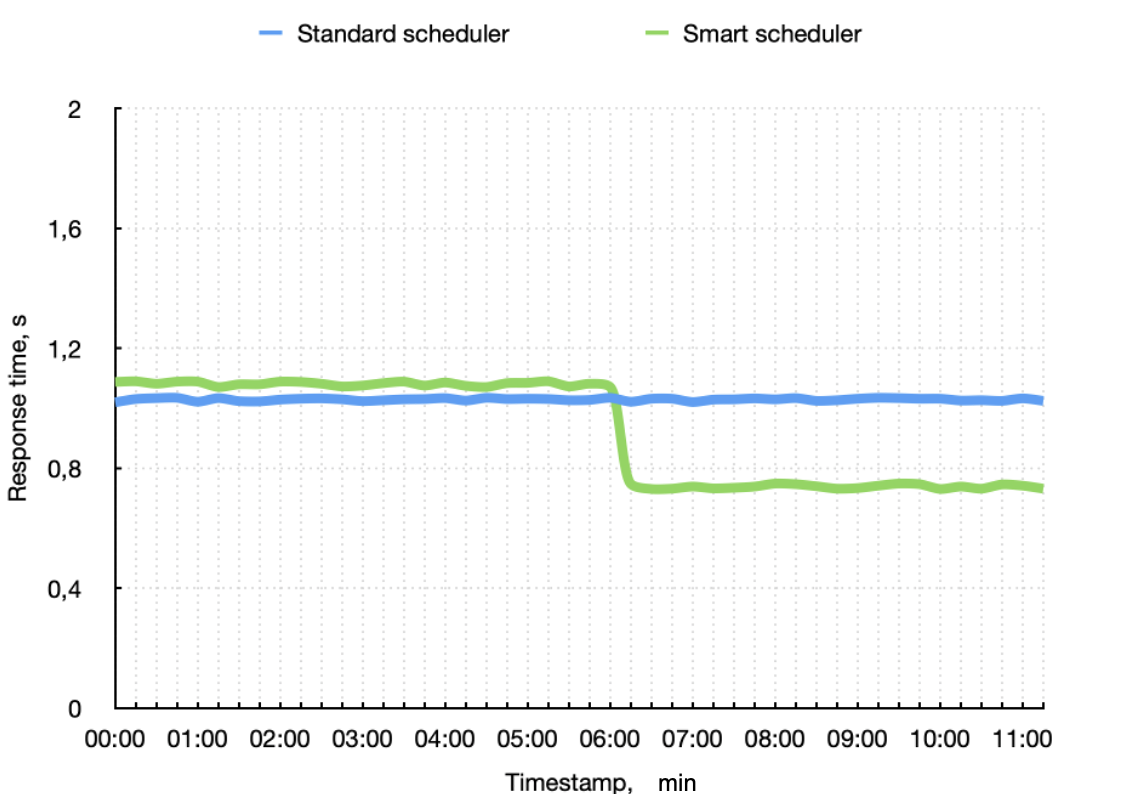

The figure shows an example of the ML-based scheduling approach applied to microservice systems. Before the ML scheduler starts, microservices are distributed across nodes arbitrarily. The ML scheduler analyzes the state of the cluster nodes and the microservices placed on them and proposes a new placement scheme. A variety of characteristics can be selected as optimization criteria, from the uniformity of temperature distribution inside the data center to the total throughput of all microservices.

The first version of KubeSmart proposes a method for improving the response time of a gateway microservice (a service that receives a request from an external user and triggers a chain of requests between microservices within the cluster). In this case, the ML scheduler determines which microservices interact with each other most often (form a call chain) and, where possible, places them close to each other on the same cluster nodes.
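The effect of co-locating a call chain can be illustrated with a small calculation. The following is a minimal Python sketch, not KubeSmart's code: the service names, node names, call counts, and the `cross_node_fraction` helper are all hypothetical. It measures the share of calls that cross a node boundary, which is the quantity that chain-aware placement tries to reduce.

```python
from typing import Dict, Tuple

Placement = Dict[str, str]               # microservice name -> node name
CallCounts = Dict[Tuple[str, str], int]  # (caller, callee) -> number of calls


def cross_node_fraction(placement: Placement, calls: CallCounts) -> float:
    """Return the share of calls whose caller and callee run on different nodes."""
    total = sum(calls.values())
    if total == 0:
        return 0.0
    remote = sum(
        count
        for (caller, callee), count in calls.items()
        if placement[caller] != placement[callee]
    )
    return remote / total


# Hypothetical traffic: one call chain (gateway -> orders -> billing)
# and one unrelated pair of services.
calls = {
    ("gateway", "orders"): 900,
    ("orders", "billing"): 800,
    ("reports", "archive"): 300,
}

# Arbitrary initial placement: both hops of the chain cross nodes.
before = {"gateway": "node-a", "orders": "node-b", "billing": "node-a",
          "reports": "node-b", "archive": "node-b"}

# Chain-aware placement: each group of interacting services shares a node.
after = {"gateway": "node-a", "orders": "node-a", "billing": "node-a",
         "reports": "node-b", "archive": "node-b"}

print(cross_node_fraction(before, calls))
print(cross_node_fraction(after, calls))
```

In this made-up example, 1700 of the 2000 calls are remote under the arbitrary placement and none are remote once each chain sits on a single node.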

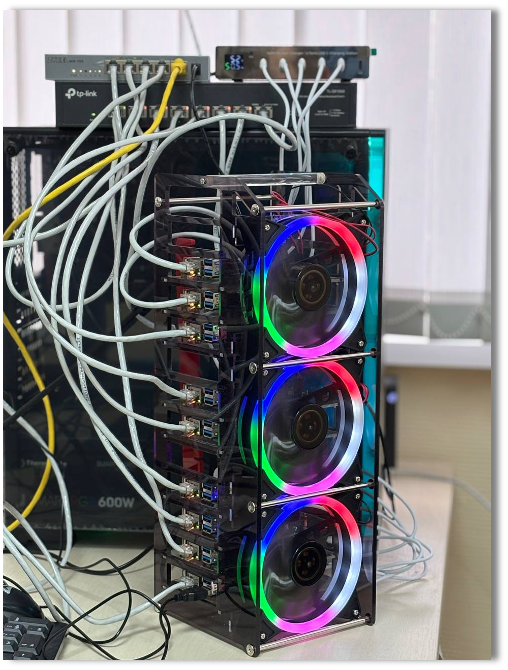

The clustering model is packaged as a Kubernetes CronJob and deployed on an experimental cluster of 10 k3s ARM nodes (shown in the figure).

Data collection is configured with the Coroot eBPF-based framework, which exports performance metrics to Prometheus. The model runs periodically: it reads metrics on how often microservices interact within a sliding window, clusters the microservices, and writes each microservice's cluster assignment to PostgreSQL.
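The periodic step described above can be sketched as follows. This is only an illustration in Python under stated assumptions: the source does not name the clustering algorithm, so connected components over a call-count threshold are used here as a stand-in, and the sample format, `window_counts`, `cluster_services`, and `min_calls` are hypothetical. Reading from Prometheus and writing to PostgreSQL are left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# One observation: (unix timestamp, caller, callee, number of calls).
Sample = Tuple[float, str, str, int]
PairCounts = Dict[Tuple[str, str], int]


def window_counts(samples: Iterable[Sample], now: float, window_s: float) -> PairCounts:
    """Sum calls per unordered service pair over the last `window_s` seconds."""
    counts: PairCounts = defaultdict(int)
    for ts, caller, callee, n in samples:
        if now - window_s <= ts <= now:
            a, b = sorted((caller, callee))  # direction is ignored
            counts[(a, b)] += n
    return dict(counts)


def cluster_services(counts: PairCounts, min_calls: int) -> Dict[str, int]:
    """Group services linked by at least `min_calls` calls (stand-in algorithm)."""
    parent: Dict[str, str] = {}

    def find(x: str) -> str:
        # Union-find lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for (a, b), n in counts.items():
        root_a, root_b = find(a), find(b)
        if n >= min_calls:
            parent[root_a] = root_b  # merge the two groups

    services = sorted(parent)
    roots = sorted({find(s) for s in services})
    cluster_id = {root: i for i, root in enumerate(roots)}
    return {s: cluster_id[find(s)] for s in services}


# Hypothetical samples; the first one is older than the window and is ignored.
samples = [
    (10.0, "billing", "archive", 500),
    (100.0, "gateway", "orders", 50),
    (160.0, "orders", "gateway", 70),
    (170.0, "orders", "billing", 90),
    (175.0, "reports", "archive", 40),
    (180.0, "gateway", "reports", 3),
]
counts = window_counts(samples, now=200.0, window_s=120.0)
print(cluster_services(counts, min_calls=30))
```

With these made-up numbers, `gateway`, `orders`, and `billing` end up in one group and `reports` and `archive` in another. The numeric cluster IDs carry no meaning beyond group membership.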

The scheduler is developed in Go as a plugin for the main Kubernetes scheduler. It reads the microservice cluster assignments from the database and builds a target placement for the specific Pod being placed. The decision is based on how many microservices from the same cluster are already placed on each node. To make the system more “flexible”, a descheduler was also developed: it removes the Pod that deviates most from the target placement scheme, thereby triggering its re-placement.
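The placement and eviction decisions can be sketched in a few lines. The real plugin is written in Go against the Kubernetes scheduler; the Python below is only an illustration of the logic, and every name in it (`score_nodes`, `pick_node`, `pick_eviction`, the tie-breaking rule, and the "gap" used to measure deviation) is an assumption rather than KubeSmart's actual implementation.

```python
from typing import Dict, List, Optional

ClusterOf = Dict[str, int]        # microservice -> cluster id written by the model
NodePods = Dict[str, List[str]]   # node -> microservices currently placed on it


def score_nodes(service: str, cluster_of: ClusterOf, node_pods: NodePods) -> Dict[str, int]:
    """Score each node by how many services of the same cluster it already hosts."""
    target = cluster_of.get(service)
    scores: Dict[str, int] = {}
    for node, pods in node_pods.items():
        if target is None:
            scores[node] = 0  # unknown service: no preference
            continue
        scores[node] = sum(
            1 for other in pods
            if other != service and cluster_of.get(other) == target
        )
    return scores


def pick_node(service: str, cluster_of: ClusterOf, node_pods: NodePods) -> str:
    """Choose the best-scoring node; ties go to the emptier node, then by name."""
    scores = score_nodes(service, cluster_of, node_pods)
    return min(scores, key=lambda n: (-scores[n], len(node_pods[n]), n))


def pick_eviction(cluster_of: ClusterOf, node_pods: NodePods) -> Optional[str]:
    """Return the service whose current node scores furthest below its best node.

    Assumption: "deviation" is the gap between the best achievable score and
    the score of the node the service runs on. None means nothing to evict.
    """
    worst: Optional[str] = None
    worst_gap = 0
    for node, pods in node_pods.items():
        for service in pods:
            scores = score_nodes(service, cluster_of, node_pods)
            gap = max(scores.values()) - scores[node]
            if gap > worst_gap:
                worst, worst_gap = service, gap
    return worst


# Hypothetical cluster assignments and node contents.
cluster_of = {"gateway": 0, "orders": 0, "billing": 0, "reports": 1, "archive": 1}

# Placing a new "billing" Pod: node-a already hosts two services of cluster 0.
print(pick_node("billing", cluster_of, {"node-a": ["gateway", "orders"],
                                        "node-b": ["reports"]}))

# Descheduling: "billing" is separated from the rest of its cluster.
scattered = {"node-a": ["gateway", "orders", "reports"],
             "node-b": ["billing", "archive"]}
print(pick_eviction(cluster_of, scattered))
```

In this example the new Pod goes to `node-a`, and the descheduler sketch selects `billing` for eviction because moving it would bring it next to two services of its own cluster. A real scheduler would also have to respect resource limits and other constraints, which this sketch ignores.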

Smart container placement scheduling algorithms achieve a performance increase of at least 10% compared with default Kubernetes scheduling.

Technology readiness level

TRL 4: A detailed mockup of the solution has been developed to demonstrate the functionality of the technology.